AI Misinformation Storm Hits US Politics: 2025 Election at Major Risk

Experts and officials have widely voiced concern about the significant impact AI-driven disinformation could have on U.S. elections. Today we will discuss the AI misinformation storm hitting U.S. politics and why the 2025 election is at major risk.

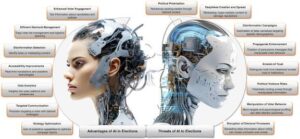

Artificial Intelligence (AI) has rapidly transformed many aspects of modern life, from business and healthcare to entertainment. But one of the most profound—and potentially dangerous—impacts is unfolding in the political arena. As the United States approaches the 2025 election cycle, experts are increasingly warning of an “AI misinformation storm” that threatens to undermine democratic processes, erode trust, and distort voter behavior.

In 2025, generative AI tools are no longer futuristic fantasies: they are powerful instruments in the hands of malign actors. Deepfakes, voice cloning, AI-driven bots, and even “disinformation-as-a-service” platforms are combining to create a perfect storm of falsehoods that could destabilize U.S. politics like never before.

This article explores how AI-driven misinformation is shaping U.S. politics in 2025, the nature of the risk, how bad actors are exploiting AI, the consequences for democracy, and what can be done to mitigate the threat.

The Nature of the AI Misinformation Threat

1. Deepfakes and Voice Cloning

One of the most striking risks posed by AI is deepfake technology: realistic audio, video, or images that depict real people saying or doing things they never did. These can be weaponized to deceive voters.

Experts warn that in future election cycles, generative AI could be used to craft damaging messages, such as robocalls that use digitally cloned voices of political figures. Such content is pernicious precisely because it plays on trust: hearing a familiar voice on the phone can be far more emotionally resonant than reading a text or seeing an image.

2. AI-Driven Disinformation Campaigns

Beyond impersonation, AI is enabling scalable disinformation operations. Generative models can produce false — but very plausible — narratives, complete with fabricated “evidence,” imagery, and even summaries of “reports.” These tools allow malign actors to produce large volumes of content rapidly, making it easier to saturate the information space.

Such misuse of AI erodes trust, intensifies polarization, and undermines confidence in democratic institutions. As AI-generated disinformation becomes more sophisticated, distinguishing fact from fabrication becomes harder, giving rise to the “liar’s dividend”: because people no longer know which evidence to trust, those caught on the record can dismiss authentic evidence as fake.

3. Malicious AI Swarms

Recent research underscores an even more insidious evolution of the threat: malicious AI swarms. These are distributed networks of AI agents that coordinate to manipulate online discourse, engage in persuasion, and overwhelm detection systems.

Such swarms can produce consistent streams of disinformation, simulate genuine grassroots movements, and execute A/B testing of narratives to optimize influence. This isn’t just about a single deepfake video—instead, it’s about a coordinated digital ecosystem working constantly to shape public opinion. The result: fragmented realities, manipulated consensus, and potentially voter suppression or mobilization in carefully targeted communities.

4. Sleeper Social Bots

Related to AI swarms are sleeper social bots — AI-driven bots that behave like human users over time and gradually influence conversations. These bots adapt their personalities, engage in political discourse, and spread misinformation without triggering immediate suspicion. Because they mimic human behavior, they are especially difficult for traditional moderation systems to detect.

Who Is Behind the AI Misinformation Campaigns?

Foreign Adversaries

The 2025 AI disinformation threat is not limited to domestic actors. Foreign adversaries, particularly nation-states, are already operating sophisticated AI-driven disinformation campaigns. Some groups have used AI to generate content and run networks of websites that push disinformation into the U.S. political landscape. These operations are strategic, long-term campaigns designed to influence public opinion and destabilize democratic institutions.

Domestic Political Actors

Political campaigns within the U.S. are also experimenting with AI. While not all uses are malicious, the risk is that some campaigns could exploit AI’s capacity for deception. Survey data shows that although Americans broadly oppose deceptive uses of AI in politics, the incentives for parties to use it remain strong when the perceived electoral advantage is high.

Commercial Disinformation Services (DaaS)

Another emerging threat is Disinformation-as-a-Service (DaaS) — companies or groups offering AI-powered influence operations for hire. These services can produce tailored disinformation campaigns for specific audiences, geographic areas, or demographic segments. This commoditization of AI misinformation means even non-state actors or political operatives with limited resources may gain access to powerful influence tools.

Why the 2025 Election Is Especially Vulnerable

Several converging factors make the 2025 U.S. election particularly susceptible to an AI misinformation storm:

Rapidly Evolving AI Technology

AI models are becoming more capable, more accessible, and cheaper to run. This democratization allows more actors, both state and non-state, to experiment with and deploy AI tactics. As generative models grow more sophisticated, so too do the disinformation campaigns they enable.

Information Overload & Trust Erosion

The modern information ecosystem is already saturated, and adding AI-generated content risks worsening its signal-to-noise problem. This leads to a breakdown in trust: once people are unsure what’s real, they may disengage altogether or fall prey to the “liar’s dividend.”

Reduced Disinformation Policing

Regulatory and moderation frameworks are struggling to keep up. Platforms are scaling back trust and safety resources, while governments are still working out how to respond to AI-specific threats.

Highly Polarized Political Landscape

U.S. politics remains deeply polarized. AI-generated content can be tailor-made to stoke existing divisions, entrench conspiracy narratives, or intensify distrust. This polarization amplifies the impact of disinformation, making messages more resonant and harder to counter.

Election Manipulation Tactics Already in Motion

Incidents are already being documented: for example, fraudulent AI-generated robocalls impersonating political leaders to discourage voters. Meanwhile, foreign AI disinformation campaigns are scaling rapidly.

Consequences for Democracy

The implications of an AI misinformation storm in U.S. politics are deep and multifaceted:

1. Erosion of Democratic Trust

When misinformation becomes ubiquitous and its origin opaque, citizens may begin to distrust not just media, but institutions themselves. If voters believe that any political information might be fabricated, the foundation of informed decision-making cracks. This erosion of trust can lead to a democratic legitimacy crisis.

2. Manipulated Voter Behavior

AI-enabled disinformation can be tailored, targeted, and persistent. It can reinforce echo chambers, spread false narratives, and masquerade as grassroots movements. Such campaigns may shift voter perceptions, suppress turnout in some communities, or mobilize others artificially.

3. Accountability Undermined

The “liar’s dividend” problem is particularly worrying. Fabricated content can cast doubt on real evidence, weakening accountability. Political figures, corporations, or foreign actors could deflect criticism by claiming that genuine, damaging evidence is AI-manipulated.

4. Disinformation Arms Race

As bad actors grow more sophisticated, democratic societies may respond with regulation, fact-checking infrastructure, or AI-detection tools. Adversaries may in turn escalate with more advanced generative models, subtler bots, and more clandestine campaigns, creating a continuous arms race with consequences for public trust and governance.

Current Defensive Measures and Their Limits

Policy and Regulation

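Federal Action: Steps have been taken to curb AI-generated robocalls and deceptive content. For example, in February 2024 the Federal Communications Commission (FCC) ruled that AI-generated voices in robocalls fall under the Telephone Consumer Protection Act’s restrictions on artificial voices.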

International Sanctions: Certain foreign groups using AI in election interference have been sanctioned.

Intelligence Monitoring: Agencies track and counter foreign AI-backed disinformation campaigns.

AI Regulation Proposals: Think tanks are pushing for stronger oversight mechanisms, including transparency rules and the watermarking of synthetic media.

Limitations: Policies often lag behind technology; bad actors operate across borders; and legal enforcement is challenging.

Technical Measures

AI Detection Tools: Systems exist to detect deepfakes, synthetic voices, and bot-generated content, but sophisticated bots and AI swarms are difficult to catch.

Platform Moderation: Social media companies have trust and safety teams, but moderation alone can’t scale to handle highly automated and sophisticated AI-driven campaigns.

Public Education: Civil society organizations emphasize media literacy, helping citizens recognize synthetic content, though educating millions remains a herculean task.

What More Needs to Be Done

To counter the 2025 AI misinformation storm, a multipronged, proactive strategy is essential:

1. Strengthen Regulation and Governance

Mandate labeling or watermarking of generative content.

Integrate “persuasion-risk” testing in AI development.

Foster global cooperation to address cross-border disinformation.

Provide election commissions with resources to monitor AI-driven threats.

2. Bolster Technical Defenses

Develop real-time AI swarm detection systems.

Embed digital provenance in AI-generated outputs.

Create independent observatories to monitor AI influence.

Provide user tools to flag potentially synthetic content.

3. Promote Public Awareness & Resilience

Scale media literacy campaigns.

Use “pre-bunking” initiatives to inoculate the public against disinformation.

Encourage transparency in political communications regarding AI use.

4. Build Ethical Norms & Industry Accountability

Adopt industry codes restricting deceptive uses of AI in campaigns.

Support civil society monitoring of AI disinformation.

Implement simple reporting mechanisms for suspected synthetic content.

Challenges and Risks to Mitigation

Detection Arms Race: More sophisticated AI models may evade detection.

Resource Constraints: Smaller election boards may struggle to implement defenses.

Regulatory Fragmentation: Without global coordination, bad actors can exploit weak jurisdictions.

Free Speech Concerns: Over-regulation could limit legitimate uses of synthetic media.

Conclusion

The looming AI misinformation storm could be one of the most consequential threats to the integrity of U.S. elections in 2025. Unlike past waves of disinformation, this threat is automated, scalable, and precision-targeted. Actors—foreign and domestic—are already using AI to produce deepfakes, run bot networks, and coordinate disinformation campaigns.

The stakes are high: if left unchecked, AI-driven misinformation could erode trust in institutions, manipulate voter behavior, and weaken the foundations of democracy.

About the Author

usa5911.com

Administrator

Hi, I’m Gurdeep Singh, a professional content writer from India with over 3 years of experience in the field. I specialize in covering U.S. politics, delivering timely and engaging content tailored specifically for an American audience. Together with my dedicated team, I track and report on the latest political trends, news, and in-depth analysis shaping the United States today. Our goal is to provide clear, factual, and compelling content that keeps readers informed and engaged with the ever-changing political landscape.